GREENSBORO, N.C. — The photos on your phone, they belong to you because your phone belongs to you.

However, if you upload those photos to iCloud to store them, iCloud belongs to Apple, and chances are you clicked ‘I Accept’, which means you’ve given Apple access to your photos. The company is now planning to scan those photos for images linked to child sexual abuse.

“It applies an algorithm that they built to make sure the explicit picture doesn't make its way to the internet. Every image you've got will be scanned by their filtration system. It doesn't mean that someone from Apple is going to come in and look at every single image that you have, and flag you and send you as a sexual predator. That's not how it works,” says tech expert Kent Meeker.

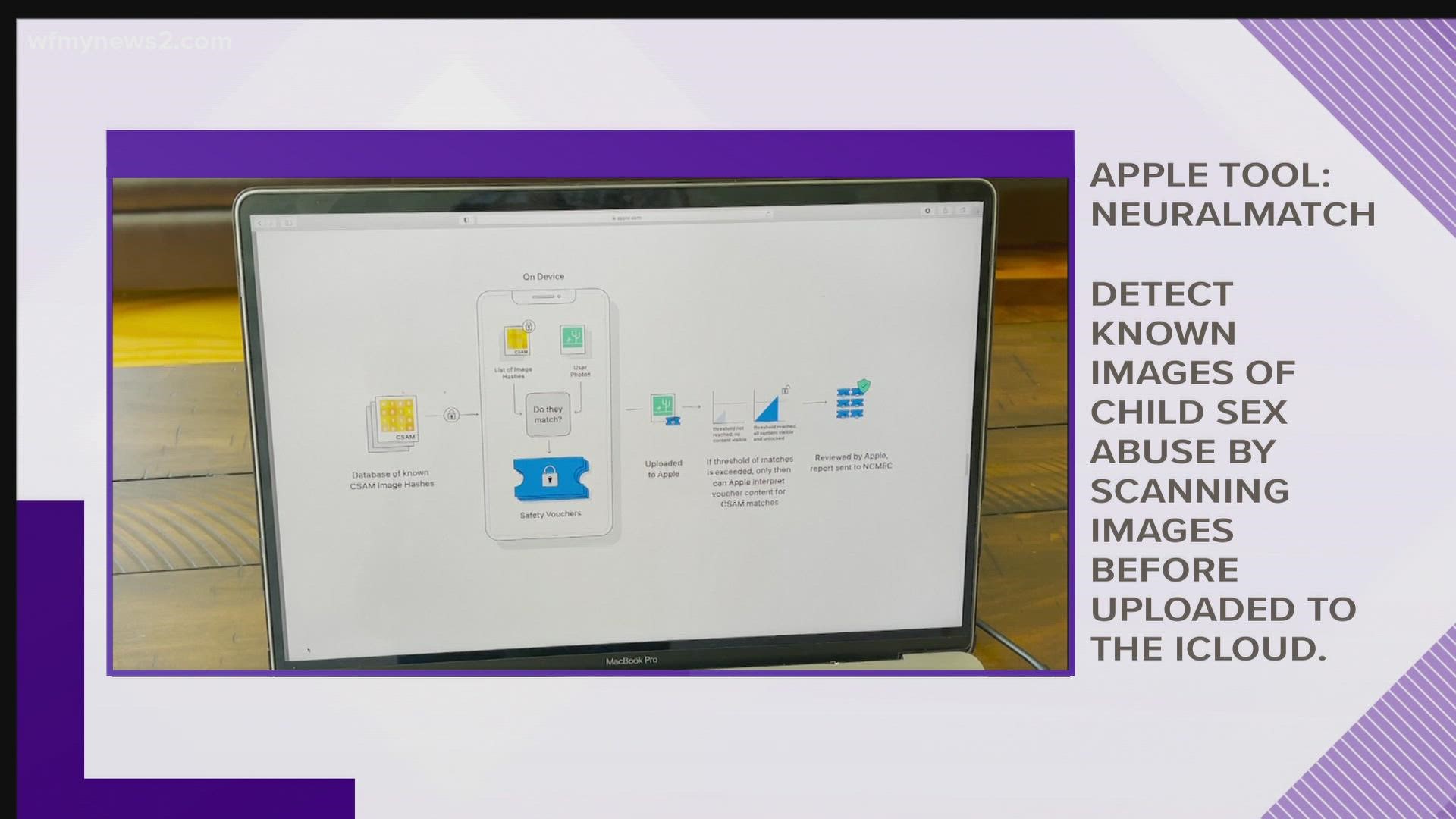

The tool, which Apple calls "NeuralMatch," will detect known images of child sexual abuse by scanning images on iPhones, iPads, and various Apple computers before they are uploaded to iCloud.

If it finds a match, the image will be reviewed by a human. If child pornography is confirmed, the user's account will be disabled and the National Center for Missing and Exploited Children will be notified.

Apple is also planning to scan messages for sexually explicit content. The detection system will flag images that are known child pornography and are in the center's database.

“Right now you can be talking on the phone and a message can come across and that can happen with your child and you don't know what you don't know,” says Meeker.

To receive warnings about sexually explicit images on their children's devices, parents will have to enroll their child's phone. Right now, we understand kids over 13 years old could possibly unenroll, meaning parents of teenagers won't get notifications. As we learn more about this new software, we'll keep you updated.

According to the Associated Press and CBS News, Apple said neither feature would compromise the security of private communications. Researchers say the matching tool, which looks for these images, doesn’t actually view an image so much as pinpoint identifiers that show up in known abuse images.

Tech companies including Microsoft, Google, Facebook and others have for years been sharing digital fingerprints of known child sexual abuse images. Apple has used those fingerprints to scan user files stored in its iCloud service for child pornography. iCloud is not as securely encrypted as Apple's on-device data.
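
For readers curious how matching against "digital fingerprints" can work without anyone looking at a photo, here is a minimal sketch. It is not Apple's method: the function names and placeholder data are made up for illustration, and an ordinary SHA-256 hash stands in for whatever fingerprinting the companies actually use, which this report does not describe. An exact hash like this one only matches byte-for-byte identical files.

```python
import hashlib


def fingerprint(image_bytes):
    """Return a hex digest used here as a stand-in 'digital fingerprint'.

    Assumption: SHA-256 is only an illustration, not the real fingerprinting scheme.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(uploads, known_fingerprints):
    """Return the names of uploads whose fingerprint is in the known set.

    Only fingerprints are compared; the image content is never inspected.
    """
    return [
        name
        for name, data in uploads.items()
        if fingerprint(data) in known_fingerprints
    ]


# Hypothetical placeholder byte strings, not real image data.
known_fingerprints = {fingerprint(b"placeholder-known-image")}
uploads = {
    "vacation.jpg": b"placeholder-vacation-photo",
    "flagged.jpg": b"placeholder-known-image",
}

# Matches would go on to human review, as described earlier in this story.
flagged_for_review = find_matches(uploads, known_fingerprints)
print(flagged_for_review)
```

In this sketch only the file whose fingerprint already appears in the shared list is flagged; every other photo passes through without being examined.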

Apple has been under government pressure for years to allow for increased surveillance of encrypted data. Coming up with the new security measures required Apple to perform a delicate balancing act between cracking down on the exploitation of children and keeping its high-profile commitment to protecting the privacy of its users.